So last week I wrote a post about how to build and configure a Raspberry Pi cluster to make use of MPI – the Message Passing Interface for parallel computing.

Today I’m going to show my initial results from testing its performance characteristics. If you followed my previous post, you’ll know that I constructed my Pi Cluster from the new Raspberry Pi 2 boards. This board is a big step up from the previous board in compute terms: it’s powered by a 900MHz quad-core ARM Cortex-A7 CPU, as opposed to the 700MHz single-core processor in the earlier Model B Pi. There are other differences too (double the memory, for example), but the CPU alone suggests that for simple workloads bounded only by compute power, the new Pi should provide a good engine.

Having built and configured the cluster with mpich2 and mpi4py, as in the earlier post, the only other change I made was to run NFS on one of the Pis and to mount an exported share on every cluster node (including the NFS server itself). That way source files are easily shared across the cluster at identical paths, something which appears essential in order for mpi4py to operate.

I based my testing upon one of the simple demo programs that come with mpi4py – appropriately enough, a tool for calculating the value of pi in a distributed fashion. You can find the calculation in the mpi4py-1.3.1/demo/compute-pi folder.

mpi4py comes with 3 different implementations of this calculation, each sharing workload across the cluster using different MPI techniques.

I took just one of these, cpi-cco.py, which operates using Collective Communication Operations (CCO) within Python objects exposing memory buffers (requires NumPy). I made some trivial changes: I removed the looping so that each execution of the program calculates Pi only once, and I allowed the number of iterations to be specified on the command line as a parameter. This let me simply use the Linux time command to measure the execution time. You can retrieve a gist of the modified file here and execute the program thus:

~# time mpiexec -n 32 -f /mnt/shared/machinefile python /mnt/shared/cpi-cco.py 1000

pi is approximately 3.1415927369231271, error is 0.000000083333334

real 0m2.173s

user 0m5.980s

sys 0m1.060s

In this example I have shared the /mnt/shared folder across all nodes in the cluster, and as in the previous post, the file machinefile lists the cluster-members, and the -n parameter specifies the number of processes to use.
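To make the division of labour concrete, here is a minimal sketch of the calculation in plain Python, with no MPI involved. It assumes the demo integrates 4/(1+x²) over [0, 1] with the midpoint rule and hands each rank every nprocs-th interval; that assumption is consistent with the error printed above for 1000 iterations, but it is not a copy of cpi-cco.py. The names partial_pi and estimate_pi are mine, and the final sum merely stands in for the collective reduction that MPI would perform.

```python
import math


def partial_pi(n, rank, nprocs):
    """One rank's share of the midpoint-rule integral of 4/(1+x^2) on [0, 1]."""
    h = 1.0 / n
    s = 0.0
    # Each rank takes every nprocs-th interval, starting at its own offset.
    for i in range(rank + 1, n + 1, nprocs):
        x = h * (i - 0.5)  # midpoint of interval i
        s += 4.0 / (1.0 + x * x)
    return s * h


def estimate_pi(n, nprocs):
    """Stand-in for the MPI reduction: add up the partial results of all ranks."""
    return sum(partial_pi(n, rank, nprocs) for rank in range(nprocs))


pi_est = estimate_pi(1000, 32)
error = abs(pi_est - math.pi)
print("pi is approximately %.16f, error is %.15f" % (pi_est, error))
```

Because the intervals are simply dealt out round-robin, every process does almost the same amount of work, which is why this workload spreads so evenly across cores.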

Results

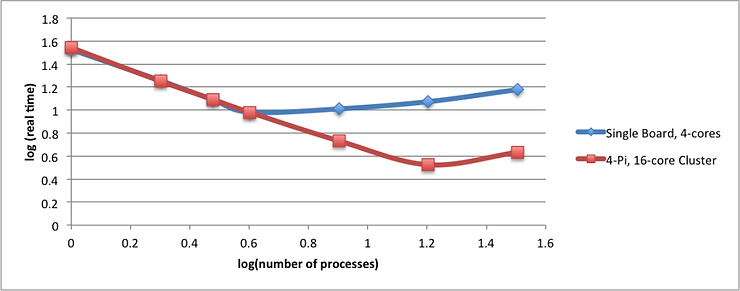

For the experiment itself, I chose an iteration count of 1 million, and ran the tests twice – once without the machinefile parameter (and thus constrained to the single board), and once with the cluster fully utilised.
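If you want to repeat the two runs without typing each command by hand, a small harness along these lines should do it. This is only a sketch: build_command and time_run are hypothetical helper names, the paths are the ones from my example above, and the wall-clock figure it returns only approximates the "real" value reported by time.

```python
import subprocess
import time


def build_command(nprocs, iterations, machinefile=None,
                  script="/mnt/shared/cpi-cco.py"):
    """Build the mpiexec argument list; leave out -f to stay on the local board."""
    cmd = ["mpiexec", "-n", str(nprocs)]
    if machinefile is not None:
        cmd += ["-f", machinefile]
    cmd += ["python", script, str(iterations)]
    return cmd


def time_run(cmd):
    """Run one command and return its wall-clock duration in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL)
    return time.perf_counter() - start
```

Calling time_run(build_command(8, 1000000)) gives the single-board timing for 8 processes, and passing machinefile="/mnt/shared/machinefile" gives the cluster timing for the same process count.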

The performance improves as long as there are spare cores available – whether on the single board, or across the cluster.

For this simple example, with load characteristics that are not throttled by memory or network IO, and with consistent behaviour and processing-cost per process, it’s no surprise to see an excellent fit with the straight-line log scaling expected, with a breakaway happening only once all available cores are utilised.
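The shape I was expecting can be written down as a very simple model: the run time halves each time the process count doubles, until there are no idle cores left. The sketch below assumes perfectly divisible work and zero communication cost, and it treats the time as flat once the cores run out, which is an idealisation. The function name and the 100-second baseline are illustrative, not measurements.

```python
def ideal_time(t1, nprocs, cores):
    """Ideal wall-clock time: work divides evenly until every core is busy."""
    return t1 / min(nprocs, cores)


t1 = 100.0  # hypothetical single-process time in seconds, not a measured value
for nprocs in (1, 2, 4, 8, 16):
    # cores=4 corresponds to a single quad-core Pi 2 board
    print(nprocs, ideal_time(t1, nprocs, cores=4))
```

On log-log axes this model is a straight line of slope -1 up to the core count, and the point where it stops falling is the breakaway.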

Follow-up note:

The other extraordinary thing I forgot to put in the post was the performance uptick from the Pi Model B to the Pi 2. Running the pi-calculation with 100,000 iterations and a single process took 14.3 seconds on the old Model B, but just 4.1 seconds on the new Pi 2, roughly 3.5 times faster. That’s pretty remarkable, and it has nothing to do with parallel processing – it’s simply the speed of the Pi. I exaggerate a little, since I can’t confirm the speed/performance of the SD storage attached to the old Model B, but I can’t see that that should make a material difference. The Pi 2 has a faster processor, but on clock speed alone it is only about 29% faster (900MHz against 700MHz); the new Pi also has double the memory, but neither factor could easily account for the massive speed-up. Neither Pi was heavily loaded at the time of the test, so I suspect it is simply the burden of running both the OS and the test on a single core that really causes the old Model B to struggle. The new Pi really is an impressive performer.
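For anyone who wants the arithmetic spelled out, this short sketch compares the measured speed-up with what the clock speeds alone would predict. It uses only the two timings above and the 900MHz and 700MHz figures quoted earlier; the variable names are mine.

```python
model_b_secs = 14.3  # single process, 100,000 iterations, old Model B
pi2_secs = 4.1       # same run on the Pi 2

speedup = model_b_secs / pi2_secs    # about 3.5x overall
clock_ratio = 900.0 / 700.0          # about 1.29x from clock speed alone
unexplained = speedup / clock_ratio  # about 2.7x left to explain

print("speed-up %.2fx, clock ratio %.2fx, remainder %.2fx"
      % (speedup, clock_ratio, unexplained))
```

So even after allowing for the higher clock, something like a factor of 2.7 remains, which is the part I am attributing to the newer core design and to the OS no longer competing with the test for a single core.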